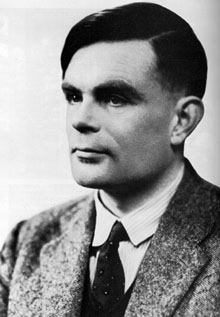

British mathematician Alan Turing, whose code-breaking helped the Allies win World War II, proposed in 1950 that we could consider computers intelligent when they successfully mimic human behavior, particularly conversation, in blind experiments. This has remained the Holy Grail of artificial intelligence research for over six decades. And despite incremental gains, this triumph remains just out of reach after all that time. I remembered this when my friend and fellow writer Jerry J. Davis recently reported his attempt at conversation with an AI simulator, Cleverbot. This program has a built-in learning heuristic, allowing it to increase in human-like behavior. According to tech writers, at a recent exhibition, it came within five points of a human control group in presenting realistic dialog. Dialog, perhaps, such as this example from its conversation with Jerry:

I considered interviewing Cleverbot myself, just to test how persuasive this “intelligent” system really is. I’ve done it before, and gotten some laughs, on a now-defunct website. The machine tended toward non sequiturs, an appallingly brief attention span, and a fondness for answering questions with questions, like a newly minted psychologist desperate to keep the conversation going. It was funny, but not particularly enlightening.

But why shoot fish in a barrel?

A programmed heuristic always stumbles because computers reason in short numerical bursts. If new concepts fit the alphanumeric learning system, the heuristic can learn them. If they don’t, it can’t. We call this “deductive reasoning,” meaning that for any question, the answer is inherent in the premise. If all Greeks are bald, and Socrates is Greek, we need add nothing to the equation to say that Socrates is bald.

Humans, by contrast, think in images and abstractions. This is why mathematicians love histograms and flow charts, because to them, numbers signify something greater. We make connections, draw conclusions, and perform leaps of faith. We call this “inductive reasoning,” meaning the answer exceeds the question. Adam Smith’s theory of capitalism is not inherent in its numbers, yet human insight can supply the missing concepts.

Let me restate that briefly, because you probably missed the upshot: human reasoning works because it’s necessarily irrational. When we surpass obvious truths and take risks in our thinking, we can turn sparse, incomplete, or lopsided information into real, useful meaning. Mathematics, biology, physics, and all the “hard” sciences rely on the same human impulse to close the gap that enables art and philosophy. Sometimes this leads to false conclusions, as with medieval “medicine,” but it also lets us comprehend life in all its imperceptible grandeur.

Post-Enlightenment thinking has often assumed that humans are rational. It assumes we plug knowable facts into prepared slots. This is why much 19th-century philosophy tried to exclude literature and religion from serious discussion, because both rely on principles we cannot separate and test.

This train of thought largely ended when putatively “scientific” justifications led to the catastrophe of World War I. Yet it somehow persists in language studies. Noam Chomsky’s theory of Generative Grammar assumes that children learning their native language analyze grammar like little engines; computer scientists assume they can reproduce such concepts digitally.

This despite the fact that no significant evidence bolsters Generative Grammar. It perseveres because Chomsky, an excellent salesman, insists it should. Anyone who has ever raised a kid through the delicate language-learning years knows that children learn to speak in a much more hit-or-miss manner than Chomsky’s analytic approach would permit.

Do we really want a computer capable of this same irrational reasoning? We like computers’ ability to compile statistics, retain data, and search facts quickly and efficiently. A machine that goes off on an artistic tangent or becomes fixed on a dead-end idea, as humans often do, would only compound our difficulties. Personally, I like my machines dim and compliant, because I can transfer the data-crunching to them, and make my own meaning.

In Yiddish myth, a person of surpassing righteousness could build a Golem, a rudimentary human made from clay. This was possible because righteousness makes that person similar to G-d. But because limited humans can never be as righteous as G-d, the Golem can never speak, because humans cannot invest our creations with souls. These Hebrews probably never imagined they had prophesied the computer’s false goals. But perhaps we could take a lesson from them: thinking machines may not rebel against us, as in science fiction, but they could easily become useless. Let machines be machines. Humans, in all our flawed glory, can close the gaps for ourselves.
